Refine generative AI models with Reinforcement Learning from Human Feedback (RLHF) using expertly curated, human-labeled datasets that improve safety, alignment, and response quality.

Trusted by 50+ global AI teams

Everything you need to deploy your app

We adapt quickly to your evolving project needs. Whether you're launching a proof of concept or scaling to millions of annotations, our agile workflow delivers speed, flexibility, and consistent quality without the bottlenecks.

Your data is your asset, and we treat it that way. We follow strict data privacy protocols, secure infrastructure practices, and industry-standard compliance requirements to keep your information protected at every step.

From emerging startups to global enterprises, we offer scalable pricing models that align with your budget and goals. You get top-tier annotation quality without the enterprise-only price tag.

A Complete Solution for AI SaaS Startups

Annotation for different types of industries.

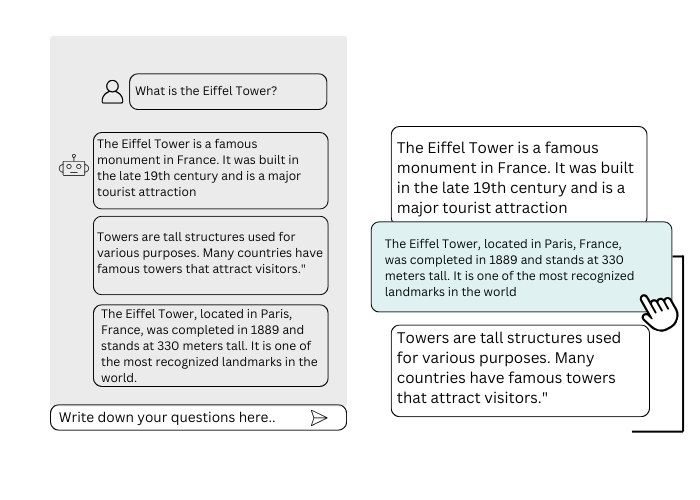

We create high-quality prompt-response pairs tailored to your domain and model objectives. Each dataset is designed to teach AI how to respond in a helpful, honest, and safe manner.

This forms the foundation of effective reinforcement learning from human feedback.
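To give a concrete picture of what one labeled comparison can contain, here is a minimal sketch in Python. The field names (`prompt`, `chosen`, `rejected`, `annotator_id`) and the JSON Lines export are hypothetical illustrations of a common RLHF convention (one prompt paired with a preferred and a less-preferred response), not a fixed delivery schema.

```python
from dataclasses import dataclass, asdict
import json


@dataclass(frozen=True)
class PreferenceRecord:
    """One human-labeled comparison (hypothetical schema for illustration)."""
    prompt: str
    chosen: str        # response the annotator preferred
    rejected: str      # response the annotator ranked lower
    annotator_id: str  # hypothetical identifier for audit and QA


def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(asdict(r)) for r in records)


record = PreferenceRecord(
    prompt="How do I reset my password?",
    chosen="Open Settings > Account, choose 'Reset password', and follow the emailed link.",
    rejected="Just make a new account.",
    annotator_id="ann-017",
)
print(to_jsonl([record]))
```

JSON Lines is used here only because it streams well into most training pipelines; any tabular or JSON format can carry the same fields.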

Our trained annotators evaluate AI outputs for clarity, accuracy, and alignment with user intent. This human-led scoring process identifies which completions best match the expected behavior.

These evaluations become the reward signals used during model fine-tuning.
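One widely used way to turn human comparisons into a reward signal is to train a reward model with a pairwise (Bradley-Terry) loss. The minimal sketch below assumes scalar scores already produced by some reward model; the numbers are made up, and this illustrates the general technique rather than any specific client pipeline.

```python
import math


def pairwise_preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Bradley-Terry loss: -log(sigmoid(r_chosen - r_rejected)).

    The loss is small when the reward model scores the human-preferred
    response higher, and large when it disagrees with the annotators.
    """
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(x)) == log(1 + exp(-x)); branch to avoid overflow in exp()
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


print(pairwise_preference_loss(2.0, 0.5))  # agrees with annotators: low loss
print(pairwise_preference_loss(0.5, 2.0))  # disagrees with annotators: high loss
```

Minimizing this loss over many labeled pairs pushes the reward model to rank responses the way annotators did; that reward model then guides the policy during fine-tuning.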

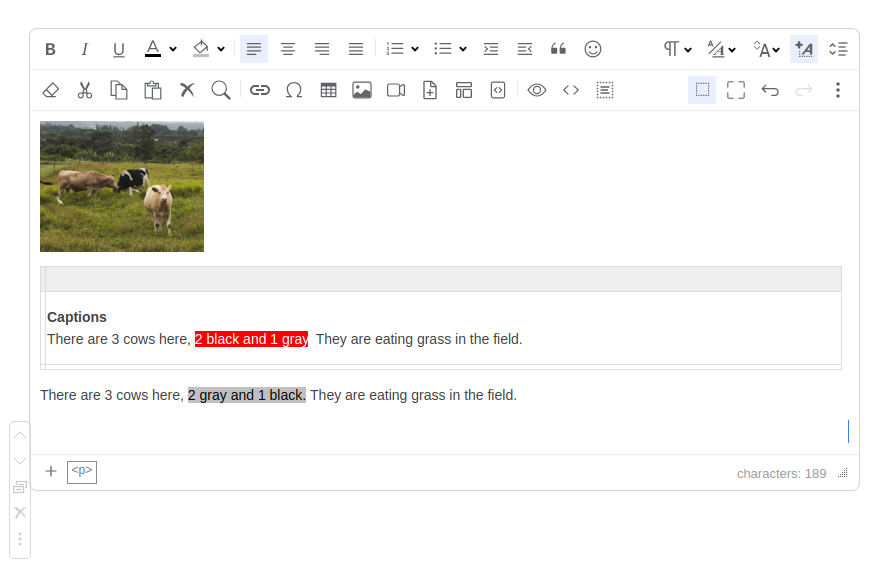

We ensure data accuracy through layered review, annotator alignment, and consensus validation. Strict QA workflows catch inconsistencies and reduce subjective bias in feedback.

Only high-confidence outputs make it into your training pipelines.
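Consensus validation can be pictured as a majority vote with an agreement threshold: items where enough annotators agree are accepted, and the rest go back for review. In the minimal sketch below, the labels ("A", "B", "tie" for which response was preferred), the 2/3 threshold, and the function name are all hypothetical choices for illustration.

```python
from collections import Counter


def consensus_label(labels, min_agreement=2 / 3):
    """Return (majority_label, agreement), or (None, agreement) if agreement is too low."""
    if not labels:
        raise ValueError("need at least one label")
    label, votes = Counter(labels).most_common(1)[0]
    agreement = votes / len(labels)
    return (label if agreement >= min_agreement else None), agreement


# Each annotator records which response they preferred (hypothetical data).
items = {
    "item-1": ["A", "A", "A"],
    "item-2": ["A", "B", "A"],
    "item-3": ["A", "B", "tie"],
}

accepted, needs_review = {}, []
for item_id, labels in items.items():
    label, agreement = consensus_label(labels)
    if label is None:
        needs_review.append(item_id)  # low agreement: send to an expert reviewer
    else:
        accepted[item_id] = label     # high confidence: eligible for training data

print(accepted)
print(needs_review)
```

Raising `min_agreement` trades dataset size for confidence; the right value depends on how subjective the task is.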

Low-quality or harmful outputs are identified, flagged, and rewritten by expert reviewers. Corrections help steer the model away from unsafe or misleading behaviors.

This iterative refinement strengthens the model’s reliability and trustworthiness.

Our clients share how Intellisane AI’s precise and reliable annotation services boosted their AI projects, showcasing our commitment to quality and trust.

Intellisane AI played a key role in helping us reach 97% accuracy in automating foundation layout detection. Their deep understanding of spatial data and labeling precision brought measurable improvements to our AI pipeline. The team was communicative, detail-oriented, and delivered everything ahead of schedule.

S. Ragavan, Sr. ML Engineer

Our fashion AI model required pixel-level segmentation across 72 garment categories—and Intellisane AI handled it flawlessly. They quickly adapted to our complex annotation guidelines and delivered consistent, high-quality labels at scale. Their domain focus, speed, and attention to visual detail were exactly what we needed.

Valerio Colamatteo, Sr. AI Scientist & Team Lead

For our ADAS project, Intellisane AI delivered precise vehicle annotations across diverse traffic scenes, including multiple object classes and occlusion scenarios. Their expertise in automotive data workflows and quality-first mindset helped us pass all validation checks, with timely delivery and professional communication throughout.

Raphael Lopez, Co-Founder & CTO

Power your transportation and navigation solutions with the highest-quality training data and accelerate ML development.

Transform your manufacturing and robotics operations with our precise data annotation services, driving efficiency, enhancing safety, and powering your machine learning models for optimal performance.

Transform healthcare solutions through accurate and comprehensive medical data annotations, ensuring enhanced diagnostic capabilities and improved patient outcomes.

Enhance the capabilities of agricultural AI systems with high-quality training data to monitor crop health, predict yields, and automate processes, driving efficiency and sustainability across the sector.

RLHF aligns generative AI with human values by training models using real human feedback—improving relevance, safety, and output quality.
